From the website:

“PeerMark is a peer review assignment tool. Instructors can create and manage PeerMark assignments that allow students to read, review, and evaluate one or many papers submitted by their classmates. With the advanced options in PeerMark instructors can choose whether the reviews are anonymous or attributed.

The basic stages of the peer review process:

- Instructor creates a Turnitin paper assignment.

- Instructor creates a PeerMark assignment and sets the number of papers students will be required to review, and creates free response and scale questions for students to respond to while reviewing papers.

- Student papers are submitted to the Turnitin assignment.

- On the PeerMark assignment start date, students begin writing peer reviews.

- For each assigned paper, students write reviews by responding to the free response and scale questions.

- Students receive reviews when other students complete them.

- Once the PeerMark assignment due date passes, no more reviews can be written, completed, or edited by the writer”

To use PeerMark in the SBU instance of Brightspace:

Go to the Content area where you want your PeerMark assignment to be located. For example, a module labeled “Week 4” or “PeerMark Assignments”.

Open the “Existing Activities” dropdown menu and choose PeerMark.

This pops up an Add Activity window where you can give your assignment a title, instructions, max grade, start date/time, due date/time, and release date/time. The most important part: check Enable PeerMark. You will also want to expand the Optional Settings area to set specific Turnitin settings. (PeerMark is owned by Turnitin, and the two are bundled together in the LMS.)

Hit Submit.

Click on the assignment link you just made.

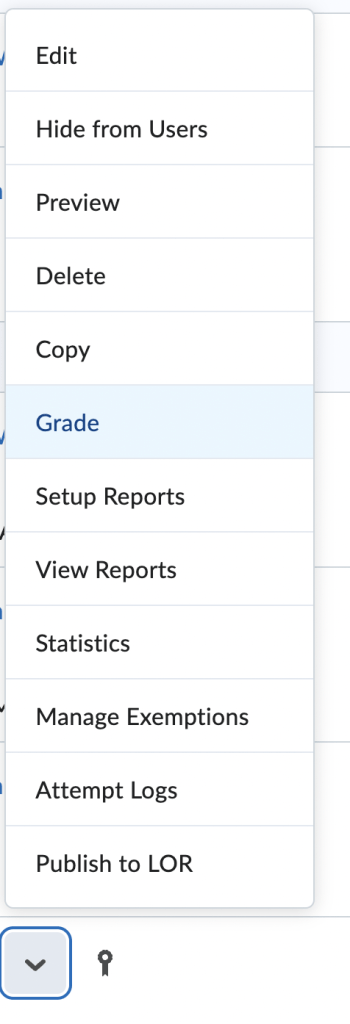

There is a PeerMark dropdown menu. Use that to get to PeerMark Setup.

Now you get to another setup area that looks too much like the last assignment setup area we were just in. I’m not sure why these aren’t all presented at once, but anyway…

You will see the title and instructions, just as you wrote them already, but Maximum points available is reset to empty. This is because the earlier max grade is the number of points the instructor will grade the assignment out of, while this maximum is what the peers will be given for completing the review.

The dates look like the ones you already set, but they are not. These are the Students can review from date/time, the students can review till date/time, and the peer feedback available date/time.

There is an additional options area here as well, which has things like Award maximum points on review: if you have three questions for them to answer during the review and 100 points total, they will get 1/3 of 100 for each question that they answer while reviewing. There are also settings for anonymity, who can review, how many papers each student will get to review, and whether students can review their own work.
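To make the Award maximum points on review arithmetic concrete, here is a minimal sketch in Python. The function name `review_points` and the assumption that points are split evenly with no rounding are mine, not something PeerMark documents; treat it as an illustration of the 1/3-of-100 example above rather than the tool's actual calculation.

```python
def review_points(max_points: float, total_questions: int, answered: int) -> float:
    """Points a reviewer earns if each answered question is worth an equal share.

    Hypothetical helper: assumes an even split and no rounding.
    """
    if total_questions <= 0:
        raise ValueError("total_questions must be positive")
    if not 0 <= answered <= total_questions:
        raise ValueError("answered must be between 0 and total_questions")
    # Each question is worth max_points / total_questions.
    return max_points * answered / total_questions


# Three questions, 100 points total: each answered question earns 1/3 of 100.
for answered in range(4):
    print(answered, "answered:", round(review_points(100, 3, answered), 2))
```

So a student who answers two of the three questions would land at roughly two thirds of the available points under this assumption.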

Save and Continue.

Now click on the PeerMark Questions tab.

You will set up the specific questions that you want the peer reviewers to work from. You can make up your own questions, Add from a Library of premade sample questions, make your own library, and Delete your own libraries.

When you make your own question, you can choose from a free response type or a scale type.

When creating a Free Response type, you also indicate the minimum answer length.

The Scale type has a size (2-5), and Lowest and Highest are the text prompts for what the minimum and maximum values represent, for example Did Not Meet Expectations / Perfect!

This will leave you with a list of the questions you are using, and you can change the order they are in by clicking Reorder Questions, dragging them around and then clicking Save Order.
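If you want to draft your questions before entering them, the two question types described above can be sketched as small Python records. The class and field names here are hypothetical and only mirror the settings mentioned in this post (minimum answer length, scale size of 2-5, lowest and highest labels); this is not a PeerMark import format or API.

```python
from dataclasses import dataclass


@dataclass
class FreeResponseQuestion:
    prompt: str
    min_length: int  # minimum answer length, as set in the question form

    def __post_init__(self):
        if self.min_length < 0:
            raise ValueError("min_length cannot be negative")


@dataclass
class ScaleQuestion:
    prompt: str
    size: int           # number of points on the scale
    lowest_label: str   # text shown for the minimum value
    highest_label: str  # text shown for the maximum value

    def __post_init__(self):
        # The post describes scale sizes from 2 to 5.
        if not 2 <= self.size <= 5:
            raise ValueError("scale size must be between 2 and 5")


# Example planning list; the prompts are made up for illustration.
questions = [
    FreeResponseQuestion("What is the paper's main argument?", min_length=50),
    ScaleQuestion("How clear is the thesis?", 5, "Did Not Meet Expectations", "Perfect!"),
]
for q in questions:
    print(type(q).__name__, "-", q.prompt)
```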

Features (?):

Assignments created in this manner will not show up under the Assignments area of Brightspace.

The Turnitin/PeerMark assignments will show up in the Calendar.

Grades for finishing the main assignment will transfer to Grades.

The PeerMark grades (what students get for completing a review) will not automatically show up in Grades. Instructors will need to transfer them manually via copy and paste.

Here is a PDF with another school’s directions (PeerMark_manual) on PeerMark, which includes some information at the end about how the students actually do the PeerMark review. I think it is pretty straightforward, but in case you need it, it is there.