Experimental Facilities

Experimental facilities at the laboratory include four engine test cells equipped with active dynamometers. Current research engines include two Ricardo Hydra single-cylinder units: one equipped with a production diesel engine head, and the other with a spark-ignition engine head that features a Fully-Flexible Valve Actuation (FFVA) system manufactured by Sturman Industries. The FFVA head has no traditional camshafts to control valve timing; instead, the valves are actuated by an electro-hydraulic system. The laboratory also houses a spark-ignited Cooperative Fuels Research (CFR) engine and a prototype single-cylinder, free-piston linear alternator. Emissions are measured using two Horiba MEXA motor exhaust gas analyzers (7100DEGR and 7500DEGR). All engines are fully instrumented with Kistler cylinder pressure transducers, as well as high-speed and low-speed data acquisition systems from National Instruments and AVL.

Fully-Instrumented Cooperative Fuels Research (CFR) Engine with Variable Compression Ratio Capabilities

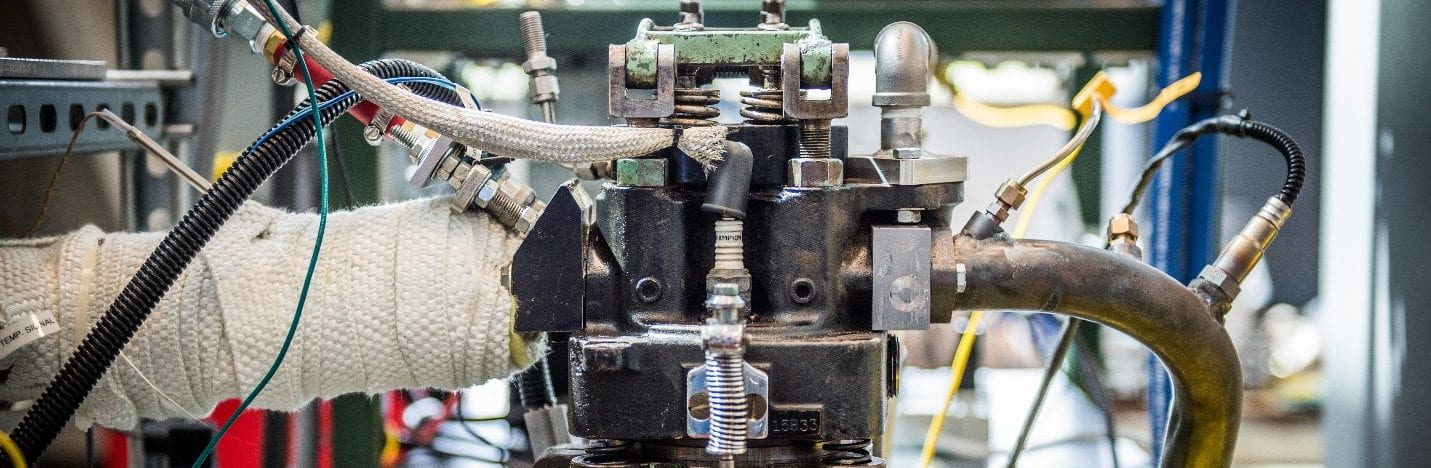

Fully-Instrumented Ricardo Hydra Engine Block with a Production Diesel Head, Complete with Port and Direct Fuel Injectors to Enable Dual-Fuel Investigations

Computing Capabilities

The research conducted within the group involves a large component of computational work, including Computational Fluid Dynamics (CFD) modeling with detailed chemical kinetics, single-zone combustion modeling, and system-level engine and vehicle modeling using various software platforms and packages. This work requires High-Performance Computing (HPC) facilities. Current computational resources include two high-performance computing clusters at the Institute for Advanced Computational Science (IACS), which are maintained by dedicated professional system administrators responsible for a secure, highly available, and high-quality environment. These resources include:

- LI-red (peak speed ~100 TFLOP/s) has 100 compute nodes (each with 128 GB of memory and 24 Intel Haswell cores at 2.6 GHz) that are used via a batch system; 1 large-memory node (3 TB of memory, 72 Intel Haswell cores at 2.1 GHz) available for interactive use; an FDR (56 Gb/s) InfiniBand network; and a 0.5-petabyte IBM GPFS shared file system. This system is optimized for HPC applications and is primarily for research use.

- SeaWulf (peak speed ~240 TFLOP/s) has 165 compute nodes (each with 128 GB of memory and 28 Intel Haswell cores at 2.0 GHz) that are used via batch, cloud, and web interfaces; 8 of these nodes have 4 NVIDIA K80 GPUs each (32 in total); a QDR (40 Gb/s) InfiniBand network; and a very fast 1.1-petabyte DDN GPFS shared file system (extensible to 3+ petabytes). This system is optimized for data-intensive applications and is available for research and education purposes.
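The peak speeds quoted for the two clusters can be roughly cross-checked from the node counts, core counts, and clock rates listed above. The short sketch below does that arithmetic. It rests on two assumptions that are not stated in the text: that each Haswell core can retire 16 double-precision floating-point operations per cycle (two 256-bit fused multiply-add units), and that each K80 contributes about 2.91 TFLOP/s of double-precision peak at boost clocks. The function and variable names are illustrative only, and this may not be how the quoted figures were originally derived.

```python
# Assumption (not from the text): a Haswell core peaks at 16 double-precision
# FLOPs per cycle (2 FMA units x 4 doubles per 256-bit vector x 2 ops per FMA).
HASWELL_DP_FLOPS_PER_CYCLE = 16

# Assumption (not from the text): ~2.91 TFLOP/s double-precision peak per K80
# at boost clocks.
K80_DP_TFLOPS = 2.91


def cpu_peak_tflops(nodes, cores_per_node, clock_ghz,
                    flops_per_cycle=HASWELL_DP_FLOPS_PER_CYCLE):
    """Theoretical CPU peak in TFLOP/s: nodes x cores x GHz x FLOPs/cycle."""
    gflops = nodes * cores_per_node * clock_ghz * flops_per_cycle
    return gflops / 1000.0


# LI-red: 100 batch nodes plus the single large-memory node.
li_red = cpu_peak_tflops(100, 24, 2.6) + cpu_peak_tflops(1, 72, 2.1)

# SeaWulf: 165 CPU nodes plus 32 K80 GPUs.
seawulf = cpu_peak_tflops(165, 28, 2.0) + 32 * K80_DP_TFLOPS

print(f"LI-red  estimated peak: {li_red:.0f} TFLOP/s")
print(f"SeaWulf estimated peak: {seawulf:.0f} TFLOP/s")
```

Under these assumptions the estimates come out at roughly 102 and 241 TFLOP/s, consistent with the approximate figures of ~100 and ~240 TFLOP/s given in the list. Sustained performance on real CFD workloads will be considerably lower than these theoretical peaks.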

In addition to the high-performance computing resources described above, the research group has three high-end workstations that are used locally for computational work, post-processing, and visualization.

Intake Flow Streamlines Determined by Computational Fluid Dynamics (CFD) Modeling

Institute for Advanced Computational Science ribbon cutting ceremony