The massively parallel architecture of GPU accelerators is being harnessed to expedite computational workloads in cutting-edge scientific research. More researchers and developers desire to port their applications to GPU-based clusters, due to their extensive parallelism and energy efficiency. This is clearly evident in the report by Intersect360 Research about top 50 HPC applications.

Unfortunately porting and writing applications for accelerators, such as GPUs, requires extensive knowledge of the underlying architecture, the application/algorithm and the interfacing programming model (e.g. CUDA). Moreover, (re-)writing kernels using lower-level programming models such as CUDA and OpenCL is a burden for application scientists. Clearly, this impacts productivity. A more appealing strategy is to leverage a programming model layered on directive-based optimization: OpenMP. Since version 4.5, OpenMP provides device offloading capabilities, and its most recent specification (November 2018) significantly extends its accelerator functionality.

Despite the variety of programming models available, it is still quite challenging to optimize large-scale applications consisting of tens-to-hundreds of thousands of lines of code. Effectively, “pragmatizing” each kernel is a repetitive and complex task. An example of this type of applications is the Grid library, which is a C++ data-parallel library, consisting roughly 40K lines of code. It is used in applications of high-energy physics, and in particular, for Lattice Quantum Chromo-dynamics (QCD). Grid library provides a core set of mathematical operators with several back-end implementations. However, most of the operations are small, don’t have enough computational work to justify a GPU execution, are deeply buried in the library specification, or are evenly spread through the application using Grid. Thus, we seek to design and build a compiler framework that can automatically and profitably offload regions of code with these characteristics.

To address these issues, our framework performs the following tasks:

1. detect potentially offloadable kernel;

2. identify common code invocation patterns arising in a program;

3. evaluate several kernel variants resulting from fusion/decomposition of kernels;

4. evaluate the profitability of each kernel variant via a novel and adaptive cost model;

5. insert pertinent compiler directives to perform offloading.

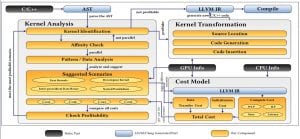

The overall flow of our approach is portrayed in Figure 1. We parse the AST to identify affine kernels and analyze it to determine various scenarios and determine the most profitable scenario of them all. If offloading of a region is found profitable we automatically insert pertinent directives to offload the region using OpenMP. We output the newly generated code supporting GPU offloading.

Recurring Function Call Patterns. The driving principle of our work resides in generating numerous kernel variants that result from fusing and/or decomposing existing function bodies. We analyze the program’s call graph to determine the “proximity” of kernel calls and evaluate the degree of data reuse among adjacent or “close-enough” calls. When such patterns are detected we generate several scenarios, until producing a single variant whose footprint is near the capacity of the GPU.

Profitability of a Kernel Variant. To compare the potential performance among the various kernel variants generated, we are designing an adaptive cost model. The precision of this cost model will depend upon the analyzability of the program. For instance, if the kernel body is affine and fits the standard requirements of the polyhedral model, we can detect the exact set of live-in and live-out data, compute the number of parallel loop dimensions and easily extract its operational intensity. If however, a kernel doesn’t fit into the standard requirements, over-approximation techniques can be leveraged to compute less precise information. Being able to compute the live-in and live-out dataset of kernels is useful when the kernel parameters are complex or deep data structures. We are also building upon existing cost models like Baghsorkhi etal’s model which proposed a workflow graph (WFG)-based analytical model and Hong etal’s recent model which propose the use of abstract kernel emulations to help identify the performance bottlenecks of a GPU program execution. Along with these, we introduce GPU initialization and data transfer cost to the model.

Automatic Kernel Generation. Once a profitable kernel variant is detected, we proceed to generate its body. Figure 2 exemplifies two such variants targeted by us. As discussed previously, if the involved kernels are affine (or close to affine) we can further optimize the code for locality, multi-level parallelism and automatically generate the necessary set of pragmas to enable the offloading. Compilers such as Polly and PoCC, with their advanced scheduling frameworks and code generation capabilities, are currently being considered for this task.

To conclude, GPUs are increasingly important to the HPC industry, but GPU offloading is a strenuous and laborious job. Our research aims at achieving automatic source-to-source codes translation to support GPU offloading, by analyzing several code variants resulting from kernel fusion/decomposition.