Members of the DoIT staff met on Monday, March 3, to discuss improvements to the System Status monitoring and reporting process. Historically, caching on the DoIT website delayed the publication of system status entries, which led to the same status events being reported multiple times.

Richard von Rauchhaupt, DoIT’s web architect, recently implemented back-end changes to the site that should allow system status entries to be published immediately: whenever a system status entry is created, the cache for the home page and the service pages is purged. He also added a new block to the system status creation screen that shows any currently affected systems. We hope this will reduce duplicate status entries, since representatives from Systems and Operations, Client Support, Data Network Services, and TLT all add service disruptions and outages.

The group agreed that it is better to have multiple entries for an outage than not to report it at all. In short, when in doubt, someone from the DoIT staff will post an alert in our Drupal content management system.

It was also recommended that our system status managers publish a reasonably detailed description of each outage or service disruption, along with an acknowledgement when a particular team has taken ownership of a problem and is working on a solution. Our team has recommitted itself to updating system status messages periodically with new details (when applicable) and will try to provide estimated resolution times as they become available.

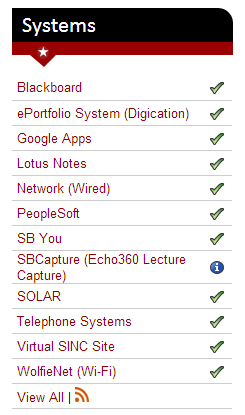

For major systems used by most of our campus community (PeopleSoft/SOLAR, Blackboard, Google Apps, WolfieNet, etc.), it was also agreed that our system status managers will post the system status to the SBU DoIT Twitter feed (@SBUDoIT or #SBUDoIT). This happens automatically when the “Post to Twitter” checkbox is checked in Drupal. Managers only need to post to Twitter once per outage, because the URL displayed on Twitter will reflect any updated messages throughout the event.

Over the next few months, DoIT’s web team will be building some basic metrics on system downtime reported through system status entries. These will give us a baseline for how available our systems tend to be, based on reported events. We can then compare that baseline against other benchmarks to check whether we are capturing all system status events and to identify which systems are unstable. These metrics will depend on accurate reporting and timely resolution of system status events.

Our team agreed that if a status event is resolved but there are potential side effects or new user instructions, the disruption or outage entry will be closed and a new “informational” entry will be created. This allows for long-term notifications to our users while still letting us accurately track the outage and its downtime.

Finally, when a system is down during a scheduled outage (that is, an event originally entered as “scheduled maintenance”), the home page and system status screen will now display a red “X” instead of the calendar icon. This more accurately reflects when a service is down. The system status archive will still show the calendar icon, so that users know most of our outages are planned and due to required system maintenance.

We welcome any feedback on these changes and certainly hope this makes things clearer for our IT Partners and the University community as a whole.