Start Spring Strong 2024 – EchoVideo Webinar Library – Echo360


Steam Boat Willy

Custom GPT

“We’re [OpenAI is] rolling out custom versions of ChatGPT that you can create for a specific purpose—called GPTs. GPTs are a new way for anyone to create a tailored version of ChatGPT to be more helpful in their daily life, at specific tasks, at work, or at home—and then share that creation with others. For example, GPTs can help you learn the rules to any board game, help teach your kids math, or design stickers.

Anyone can easily build their own GPT—no coding is required. You can make them for yourself, just for your company’s internal use, or for everyone. Creating one is as easy as starting a conversation, giving it instructions and extra knowledge, and picking what it can do, like searching the web, making images or analyzing data. Try it out at chat.openai.com/create.

Example GPTs are available today for ChatGPT Plus and Enterprise users to try out including Canva and Zapier AI Actions. We plan to offer GPTs to more users soon.

Learn more about our OpenAI DevDay announcements for new models and developer products.

GPTs let you customize ChatGPT for a specific purpose

Since launching ChatGPT people have been asking for ways to customize ChatGPT to fit specific ways that they use it. We launched Custom Instructions in July that let you set some preferences, but requests for more control kept coming. Many power users maintain a list of carefully crafted prompts and instruction sets, manually copying them into ChatGPT. GPTs now do all of that for you.

The best GPTs will be invented by the community

We believe the most incredible GPTs will come from builders in the community. Whether you’re an educator, coach, or just someone who loves to build helpful tools, you don’t need to know coding to make one and share your expertise.

The GPT Store is rolling out later this month

Starting today, you can create GPTs and share them publicly. Later this month, we’re launching the GPT Store, featuring creations by verified builders. Once in the store, GPTs become searchable and may climb the leaderboards. We will also spotlight the most useful and delightful GPTs we come across in categories like productivity, education, and “just for fun”. In the coming months, you’ll also be able to earn money based on how many people are using your GPT.

We built GPTs with privacy and safety in mind

As always, you are in control of your data with ChatGPT. Your chats with GPTs are not shared with builders. If a GPT uses third party APIs, you choose whether data can be sent to that API. When builders customize their own GPT with actions or knowledge, the builder can choose if user chats with that GPT can be used to improve and train our models. These choices build upon the existing privacy controls users have, including the option to opt your entire account out of model training.

We’ve set up new systems to help review GPTs against our usage policies. These systems stack on top of our existing mitigations and aim to prevent users from sharing harmful GPTs, including those that involve fraudulent activity, hateful content, or adult themes. We’ve also taken steps to build user trust by allowing builders to verify their identity. We’ll continue to monitor and learn how people use GPTs and update and strengthen our safety mitigations. If you have concerns with a specific GPT, you can also use our reporting feature on the GPT shared page to notify our team.

GPTs will continue to get more useful and smarter, and you’ll eventually be able to let them take on real tasks in the real world. In the field of AI, these systems are often discussed as “agents”. We think it’s important to move incrementally towards this future, as it will require careful technical and safety work—and time for society to adapt. We have been thinking deeply about the societal implications and will have more analysis to share soon.

Developers can connect GPTs to the real world

In addition to using our built-in capabilities, you can also define custom actions by making one or more APIs available to the GPT. Like plugins, actions allow GPTs to integrate external data or interact with the real-world. Connect GPTs to databases, plug them into emails, or make them your shopping assistant. For example, you could integrate a travel listings database, connect a user’s email inbox, or facilitate e-commerce orders.

The design of actions builds upon insights from our plugins beta, granting developers greater control over the model and how their APIs are called. Migrating from the plugins beta is easy with the ability to use your existing plugin manifest to define actions for your GPT.

Enterprise customers can deploy internal-only GPTs

Since we launched ChatGPT Enterprise a few months ago, early customers have expressed the desire for even more customization that aligns with their business. GPTs answer this call by allowing you to create versions of ChatGPT for specific use cases, departments, or proprietary datasets. Early customers like Amgen, Bain, and Square are already leveraging internal GPTs to do things like craft marketing materials embodying their brand, aid support staff with answering customer questions, or help new software engineers with onboarding.

Enterprises can get started with GPTs on Wednesday. You can now empower users inside your company to design internal-only GPTs without code and securely publish them to your workspace. The admin console lets you choose how GPTs are shared and whether external GPTs may be used inside your business. Like all usage on ChatGPT Enterprise, we do not use your conversations with GPTs to improve our models.

We want more people to shape how AI behaves

We designed GPTs so more people can build with us. Involving the community is critical to our mission of building safe AGI that benefits humanity. It allows everyone to see a wide and varied range of useful GPTs and get a more concrete sense of what’s ahead. And by broadening the group of people who decide ‘what to build’ beyond just those with access to advanced technology it’s likely we’ll have safer and better aligned AI. The same desire to build with people, not just for them, drove us to launch the OpenAI API and to research methods for incorporating democratic input into AI behavior, which we plan to share more about soon.

We’ve made ChatGPT Plus fresher and simpler to use

Finally, ChatGPT Plus now includes fresh information up to April 2023. We’ve also heard your feedback about how the model picker is a pain. Starting today, no more hopping between models; everything you need is in one place. You can access DALL·E, browsing, and data analysis all without switching. You can also attach files to let ChatGPT search PDFs and other document types. Find us at chatgpt.com.”

[from https://openai.com/blog/introducing-gpts]

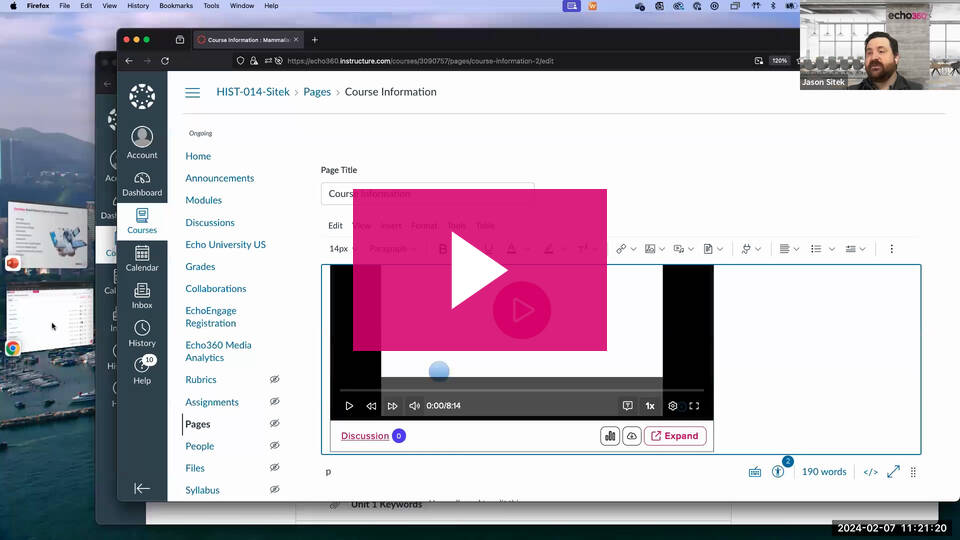

Need to regrade a finished quiz?

If you realize after the fact that a quiz or exam had an error, fixing the question itself is not enough: when you edit it, Brightspace lets you know that the change will only affect future users who take the exam. You should still correct the question there, but to regrade the attempts that are already finished, we need to do something else.

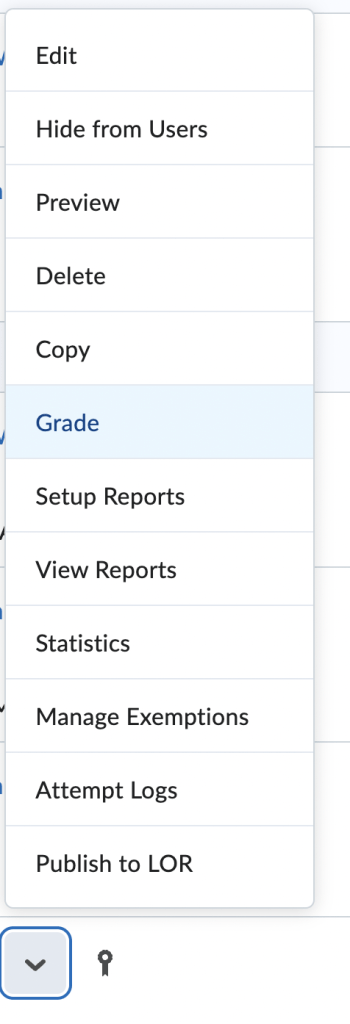

Go to the Exams/Quizzes area. Find the assessment that had the problem. Click on the dropdown menu to the right of the name of the assessment. Click on Grade.

Check the radio button for Update all attempts. Find the Question that had an issue.

Clicking on the question will give you a breakdown of how it was answered and show what was graded as correct.

Here you can choose whether to give everyone points, or just give points to the people who answered in the desired manner.

Click Save.
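To picture what the two choices in the grading step do, here is a minimal Python sketch. It only illustrates the logic and is not Brightspace's actual code; the function name `regrade`, the student names, and the point values are all made up for the example.

```python
def regrade(attempts, accepted_answers, points, give_to_everyone=False):
    """Return the new score on one question for every student.

    attempts: dict mapping student -> the answer they gave (hypothetical data)
    accepted_answers: set of answers that should now count as correct
    points: what the question is worth
    give_to_everyone: True mimics "give everyone points"
    """
    return {
        student: points if give_to_everyone or answer in accepted_answers else 0
        for student, answer in attempts.items()
    }


# Hypothetical attempts on a question whose key was wrong.
attempts = {"ana": "B", "ben": "C", "cy": "A"}

# Option 1: everyone gets the points, whatever they answered.
everyone = regrade(attempts, {"B"}, 2, give_to_everyone=True)

# Option 2: only students who gave an accepted answer get the points.
only_accepted = regrade(attempts, {"B", "C"}, 2)

print(everyone)
print(only_accepted)
```

The first option is the blunt fix when a question was simply broken; the second fits the case where the answer key was wrong but the question itself was fair.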

Call for participation with Brightspace

Participate in User Experience Research!

As part of our product development process, we consult with users (like you!) through our user experience (UX) research. In UX research, we take a systematic approach to listening to the perspectives of our end users. This allows us to gain a better understanding of our users and their needs so we can create products that effectively support teaching and learning.

Some of our studies focus on better understanding your challenges and needs to help us plan our projects. Other studies focus on testing user interfaces that we’re designing or have built to help us make our platform intuitive and accessible for all users. We also run surveys to hear from a larger sample of Brightspace users.

We keep a database of Brightspace users that we contact periodically to invite to participate in our UX research sessions. It’s a great opportunity to share your feedback on specific topics or areas of Brightspace. We’d love for you to be a part of it.

Who can participate?

We’re looking for people who use an LMS:

- Instructors, teachers, faculty

- Instructional designers and online learning specialists

- Students in higher education

- Learning and development specialists in corporate organizations

- Individual contributors and managers or people leaders

- Academic administrators, principals, and advisors

- LMS administrators and instructional technologists

We especially encourage people with disabilities to sign up.

Please note: We typically conduct our UX research sessions in English. If there is another language you would feel more comfortable in during our session together, you can request that language when you sign up for a session and we will do our best to accommodate wherever possible.

What does participation look like?

When you sign up to our Participant Database, we’ll contact you periodically to see if you’d like to participate in a study. Each invite will have a description of the study and a link to a calendar where you can find a timeslot that will work for you or a link to a survey. Typically, in a UX research session, you will join us via Zoom for an hour. We will ask you some questions about your work and about the research topic, and we may ask you to try out a few tasks in a prototype and provide your feedback. We offer a reward for your participation as a token of our appreciation.

How do I sign up?

We ask for your contact information and a few details about your work to match you with appropriate studies. It only takes a couple of minutes to complete the sign up form.

Always a favorite

“I can tell it is GPT because my students don’t write that well”

Farm animols is so fun to visit! They live on farms with lots of other animols like cawos, pigs, and chikens. Farmers takes care of them and give them food and water every day.

Cawos are really big and have spotts or are all one color. They make milk that we drink and cheese and ice cream too! Pigs are pink and have curly tails. They likes to roll around in the mud and eat corn. Chikens are really noisy and lay eggs that we eat for breakfast. They also likes to peck at the ground to find worms.

Farm animols are important because they give us food to eat and things like wool for are clothes. They also likes to play and run around, just like we do! We can learn a lot from farm animols and it’s fun to go see them and pet them.

Turns out you can ask it to write something at a particular grade level, with age-appropriate spelling and grammatical mistakes.

Using PeerMark in Brightspace

From website:

“PeerMark is a peer review assignment tool. Instructors can create and manage PeerMark assignments that allow students to read, review, and evaluate one or many papers submitted by their classmates. With the advanced options in PeerMark instructors can choose whether the reviews are anonymous or attributed.

The basic stages of the peer review process:

- Instructor creates a Turnitin paper assignment.

- Instructor creates a PeerMark assignment and sets the number of papers students will be required to review, and creates free response and scale questions for students to respond to while reviewing papers.

- Student papers are submitted to the Turnitin assignment.

- On the PeerMark assignment start date, students begin writing peer reviews.

- For each assigned paper, students write reviews by responding to the free response and scale questions.

- Students receive reviews when other students complete them.

- Once the PeerMark assignment due date passes, no more reviews can be written, completed, or edited by the writer”

To use PeerMark in the SBU instance of Brightspace:

Go to the Content area where you want your PeerMark assignment to be located. For example, a module labeled “Week 4” or “PeerMark Assignments”.

Go to the dropdown menu “Existing Activities” -> PeerMark.

This pops up an Add Activity window where you can give your assignment a title, instructions, max grade, start date/time, due date/time, and release date/time, and, the pretty important part, check Enable PeerMark. You will also want to expand the Optional Settings area to set specific Turnitin settings. (PeerMark is owned by Turnitin, and the two are bundled together in the LMS.)

Hit Submit.

Click on the assignment link you just made.

There is a PeerMark dropdown menu. Use that to get to PeerMark Setup.

Now you get to another setup area that looks too much like the last assignment setup area we were just in. I’m not sure why these aren’t all presented at once, but anyway…

You will see the title and instructions, just like you wrote them already, but the Maximum points available is reset to empty. This is because the earlier max grade was the number of points the instructor will grade the assignment out of, while this maximum is what the peers will be given for completing the review.

The dates look like the ones you already set, but they are not. These are the Students can review from date/time, the Students can review till date/time, and the Peer feedback available date/time.

There is an additional options area here as well, which has things like Award maximum points on review (if you have three questions for them to answer during the review and 100 points total, they will get 1/3 of 100 for each question that they answer while reviewing). There are also settings for anonymity, who can review, how many papers each student will get to review, and whether students can review their own work.
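As a quick check of the Award maximum points on review arithmetic, here is a small Python sketch. It assumes the points are split evenly across the questions, as in the 100-point, three-question example; the function name `review_points` is made up for illustration and is not part of PeerMark.

```python
def review_points(max_points, num_questions, answered):
    """Points a reviewer earns if each answered question is worth an equal share.

    Assumes an even split of max_points across num_questions.
    """
    if num_questions <= 0:
        raise ValueError("num_questions must be positive")
    if not 0 <= answered <= num_questions:
        raise ValueError("answered must be between 0 and num_questions")
    return max_points * answered / num_questions


# 100 points, three questions: each answered question is worth about 33.33.
for answered in range(4):
    print(answered, round(review_points(100, 3, answered), 2))
```

Under that assumption, a student who answers two of the three questions would earn roughly 66.67 of the 100 points.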

Save and Continue.

Now click on the PeerMark Questions tab.

You will set up specific questions that you want the peer reviewers to work from. You can make up your own questions, Add from a Library of premade sample questions, make your own library, and Delete your own libraries.

When you make your own question, you can choose from a free response type or a scale type.

When creating a Free Response type, you also indicate the minimum answer length.

The Scale type has a size (2-5), and the Lowest and Highest fields are the text prompts for what the minimum and maximum values represent, for example “Did Not Meet Expectations” for the lowest and “Perfect!” for the highest.

This will leave you with a list of the questions you are using, and you can change the order they are in by clicking Reorder Questions, dragging them around and then clicking Save Order.

Features (?):

Assignments created in this manner will not show up under the Assignments area of Brightspace.

The Turnitin/PeerMark assignments will show up in the Calendar.

Grades for finishing the main assignment will transfer to Grades.

The PeerMark grades (what the students get for completing a review) will not automatically show up in Grades. Instructors will need to enter them manually via copy and paste.

Here is a PDF with another school’s directions ( PeerMark_manual ) on PeerMark, which includes at the end some information about how the students actually do the PeerMark review. I think it is pretty straightforward, but in case you need it, it is there.

Cactus Chairs…

GPT: I apologize, that answer was a mistake on my part. “Cactus chairs” is not a commonly used type of alternative seating for individuals with Autism Spectrum Disorder (ASD). Thank you for bringing this to my attention.

GPT: I apologize, the answer “cactus chairs” was not based on any research or real-world information. It was a mistake and not a valid option for alternative seating in a classroom. As a language model, I generate responses based on patterns I’ve seen in the data I was trained on, but I can still make errors or mistakes. Thank you for bringing this to my attention.

Regarding citations and ChatGPT

The guidance from several academic journal editors has been to make a statement of one’s use of any AI chat/dialogue tools (like ChatGPT) in the acknowledgment section. Because it is nothing more than a large language model, it can’t assume responsibility as a human author would.

My recommendation would be to use an extension like ChatGPT Phantom, which writes its output with citations, though not always academic citations.

You can also use Longshot.ai to create writing output that will include about 10-15 citations from mainstream media articles and some open source journals.

Students would do best to copy and paste subjects and phrases from AI writing output into a tool like Elicit.org to gain access to various open source academic articles related to the topic. This way, they can validate the output against research studies and cite their sources accordingly.

Source: Jim Brauer, GPTzero Educators (group on Facebook)